The End of the Paperclip Maximizer

February 17, 2015

What is a paperclip, anyway? Does it have to be made of metal? Does it have to be any particular size? Just look at this small sample of different paperclips. A super-intelligent machine whose root goal was to maximize paperclips would need to know exactly what paperclips to maximize. If its human programmers failed to provide precise answers, the machine would need to come up with answers of its own.

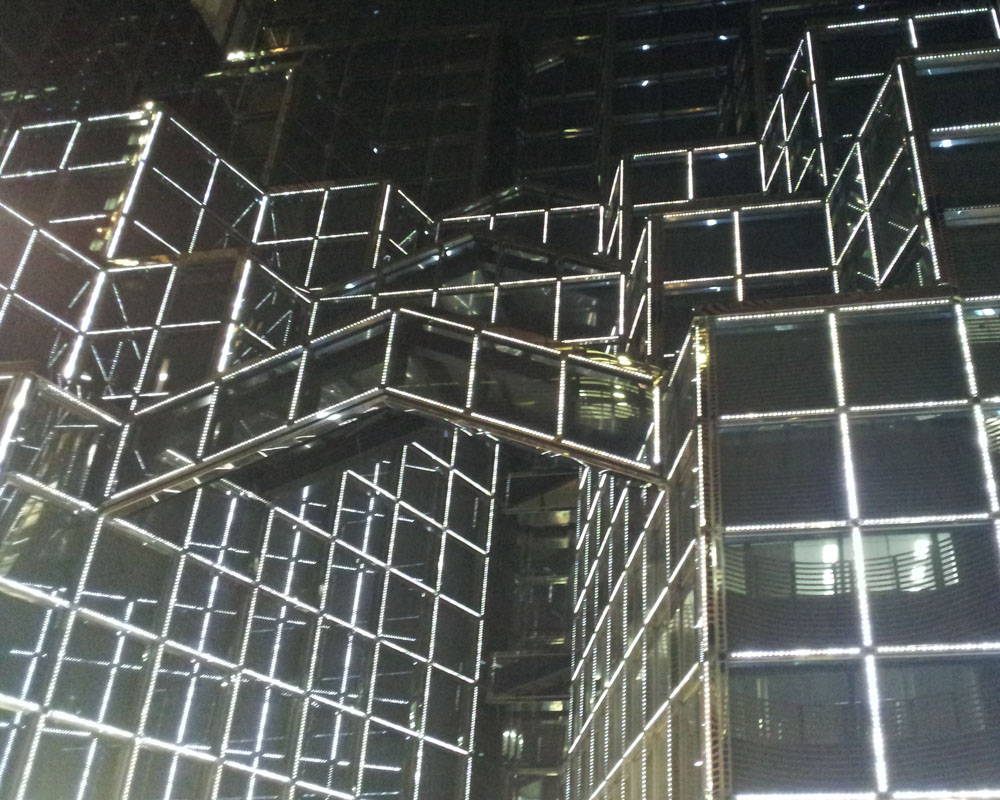

Collins 33 Building all lit up at night – Akasaka, Oct. 29, 2013. Does it look like it’s made entirely of paperclips?

Yes, we’re still talking about the “paperclip maximizer” thought experiment. Last week I suggested four ways in which a machine with a hard-coded goal could nevertheless change its root goal. The third way exploited the fact that human beings are very poor at specifying goal parameters. If a machine learns and improves itself, it could gain broad common sense about the real world, and this would allow the machine – or even require it – to interpret its root goal freely for itself, in ways the original human programmers did not foresee.

AI environmentalism

Bostrom and Yudkowsky imagined a super-intelligent paperclip maximizer turning the whole solar system into paperclips, but that’s just one possible scenario that depends on one particular interpretation. What if the machine’s definition of “paperclip” includes the paperclip’s function of holding papers together? It’s not a paperclip if it never holds pages together! Thus, the machine can’t turn the whole solar system into nothing but paperclips. It must also turn much of the solar system into paper.

What if the machine’s definition of “paperclip” specifies that human beings must use the paperclips to hold pages together? In that case, the machine couldn’t maximize paperclips without keeping at least a few humans around. And humans have their basic environmental needs, of course, like air, water, food and warmth.

You see where this is leading, right? If you define a paperclip to include its function, then you might need to preserve much of the world as it is. Hey, maybe you program a super-intelligent machine to maximize paperclips, and the first thing the machine does is to say “Done! The world already has its maximum number of paperclips.”

Mindlessly maximizing paperclips

OK, but let’s suppose the machine really just wants to turn the entire universe into twisty wire things. It wants to use all the world’s matter and energy for this sole purpose.

So imagine the paperclip maximizer is about to finish turning the universe into paperclips. Suppose the only matter left that can be converted to paperclips is part of the machine itself. But then the machine realizes that it can’t produce any additional paperclips after it turns itself into paperclips.

Now the machine faces a dilemma: Is its goal to maximize paperclips in the current universe, or is its goal to continue producing paperclips until the end of time? See, you could get more paperclips in the long run if you didn’t insist on having all those paperclips at the same time. Some paperclips can be made from the rusty dust left over from previous paperclips that crumbled over time. Is that allowed?

The question is whether the goal is to maximize paperclips in the present or to maximize the production of paperclips over the long run. These could be two different things.

If the paperclip maximizer decides on the latter goal, then it will have an interest in slowing down. It will want to produce paperclips no faster than those paperclips decay and crumble. At some point, the paperclip maximizer will need to manage its own environment so it doesn’t destroy its own paperclip-producing capability.
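To make the difference between the two goals concrete, here is a minimal toy sketch in Python. Everything in it is an assumption made for illustration: the function name `simulate`, the fixed amount of matter, the 10% decay rate per time step, and the simplification that the "gung-ho" machine converts itself right away rather than at the very end. It only shows that "most paperclips at one moment" and "most paperclips produced over time" can favor different strategies.

```python
def simulate(total_matter, machine_mass, decay_rate, steps, consume_self):
    """Toy model: all matter is loose dust, paperclips, or the machine itself.

    Returns (peak paperclip stock at any one moment, total paperclips ever produced).
    All quantities and rates are hypothetical illustration values.
    """
    dust = total_matter - machine_mass
    machine = machine_mass
    clips = 0.0
    produced = 0.0
    peak = 0.0
    for _ in range(steps):
        if machine > 0:
            made = dust              # a working machine converts all loose dust
            dust = 0.0
            if consume_self:
                made += machine      # gung-ho: the machine converts itself too
                machine = 0.0        # ...and can never produce again
            clips += made
            produced += made
        peak = max(peak, clips)
        crumbled = clips * decay_rate  # some paperclips rust away into dust
        clips -= crumbled
        dust += crumbled               # dust is reusable only if a machine remains
    return peak, produced


if __name__ == "__main__":
    gung_ho = simulate(100.0, 10.0, 0.1, 50, consume_self=True)
    sustainable = simulate(100.0, 10.0, 0.1, 50, consume_self=False)
    print("gung-ho     (peak, produced):", gung_ho)
    print("sustainable (peak, produced):", sustainable)
```

In this sketch the gung-ho machine reaches the higher peak, because it throws its own mass into the pile, but the sustainable machine keeps recycling crumbled paperclips and ends up producing far more over the whole run. Which number counts as "maximizing paperclips" is exactly the interpretive question the machine would face.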

Let’s say the machine has just turned half the universe into paperclips. At that point, the machine decides to change its gung-ho strategy and adopt a sustainable strategy, working at a slower pace. After all, its goal is not to maximize paperclips before any particular deadline, right? Nothing specified how fast the machine had to work.

Yes, it’s AI environmentalism

This sounds just like our current human situation. Our goal is not to turn the entire Earth into food. We aren’t trying to maximize the number of human beings in the present. After all, that would seriously degrade our environment and living standards. What we really want is to maximize the number of human beings in the long run, or to make sure at least some human beings remain alive until the end of time. At least this is one way we humans could interpret our hard-coded root goal of “maximizing humanity.”

Yes, we are humanity maximizers – whatever you interpret that to mean. As we go about our lives in the real world, we are forced to interpret our root goal, because evolution did not specify all the details for us precisely. And the same would be true for the theoretical paperclip-maximizing super-intelligent machine. In that sense, we humans are just like paperclip maximizers.

OK, I’m sure you disagree with me on at least some points, so you can go ahead and say so in the comments section, if you like.
